Home Theater Geeks 518 Transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

Scott Wilkinson [00:00:00]:

In this episode of Home Theater Geeks, I chat with Modi Margalit, CEO and co-founder of Sonic Edge, about a new speaker transducer technology. So stay tuned.

Moti Margalit [00:00:15]:

Podcasts you love from people you trust.

Scott Wilkinson [00:00:20]:

This is TWiT. Hey there, Scott Wilkinson here, the Home Theater Geek. In this episode, I'm going to chat with Moti Margalit, CEO and co-founder of Sonic Edge, a company that's doing some really interesting stuff with a new speaker technology. Hey Moti, welcome to the show.

Moti Margalit [00:00:53]:

Thanks, Scott. It's a pleasure being here.

Scott Wilkinson [00:00:56]:

I'm so glad to have you on. I first learned about your company and your research, uh, in a press release for CES, which was just last month. I didn't get to go and meet you there, but I'm so glad to get a chance to talk with you here.

Moti Margalit [00:01:16]:

I'm glad too for that opportunity.

Scott Wilkinson [00:01:19]:

So we're talking about speaker technology, which for the most part hasn't really changed in what, 100 years or so?

Moti Margalit [00:01:30]:

Even 150 years.

Scott Wilkinson [00:01:32]:

Even 150 years. Let's start with— give us a brief overview of sort of conventional speaker technology.

Moti Margalit [00:01:41]:

Sure. So there's some debate who really invented the speakers, but Alexander Graham Bell is typically credited with it 150 years ago. And basically the technology has stayed the same. You have a membrane moving pushing air, generating sound pressure which travels at the speed of sound, reaches our ears, and we register that as audio. And that technology is basically the same technology if it's loudspeakers in your living room or very small speakers fitting into your earphone or hearing aid. And when you try to make a speaker small, you run into multiple physical problems because of this structure that, that speakers all about. My partner, Ari, who co-founded with me the company, he uses hearing aids. And the common complaint of people using hearing aids is that it's better than nothing, but they don't really hear well.

Moti Margalit [00:02:41]:

They don't hear well in crowds, they don't hear well in noisy environments, and they can't really enjoy music. From that, we started thinking about making a really small speaker.

Scott Wilkinson [00:02:54]:

Yeah, really small, in fact. And so what you did was come up with or use a technology that's been around for a while called MEMS, or MEMS, which stands for microelectromechanical.

Moti Margalit [00:03:12]:

System.

Scott Wilkinson [00:03:15]:

So give us an overview of what this technology is about.

Moti Margalit [00:03:19]:

Sure. So MEMS is a technology that's been around for, I would say, nearly 50 years now. We all know about silicon technology, computer chips, all the electronic chips around us. And in silicon technology, you basically make transistors and other electronic circuits in silicon. MEMS, microelectromechanical systems, is basically making mechanical structures in the silicon. So instead of having transistors, you have, for example, membranes. You can have different kind of structures. And these devices make, for example, accelerometers used in the crash detection in cars to deploy airbags, used in your cell phone to detect the various motions.

Moti Margalit [00:04:09]:

Other uses of MEMS technology is for microphones. So in your cell phone, you would have 4 MEMS microphones basically picking up the voice and translating that into electronics. So MEMS is a technology where you make a mechanical structure, typically combine it with some electronic pickup or electronic actuation, and then have an interface between the electronic world and the mechanical and the physical world.

Scott Wilkinson [00:04:39]:

The mechanical parts are super tiny, right?

Moti Margalit [00:04:44]:

Yes, that's why they're called microelectromechanical systems. So the size of these structures is typically, let's say, smaller than the width of a hair. So you're looking at structures which are very small and can provide various types of functionality.

Scott Wilkinson [00:05:06]:

And so how does this get applied to a speaker driver or transducer?

Moti Margalit [00:05:15]:

So actually, MEMS by itself is not enough. Like we said, MEMS technology has been around for 50 years. And back in 2000 or so, a company called Knowles basically developed the MEMS microphone. So MEMS microphone is a membrane which when it sees pressure changes, it vibrates and then translates those vibrations into electronics. Now, from the minute that people invented the MEMS microphones, and today, you know, 8 billion of these MEMS microphones are being used in every year in cell phones, cars, laptops, basically everywhere. So from the minute the MEMS microphone was invented, people were trying to make a MEMS speaker because the the way— same way that a microphone picks up changes in the air pressure, a speaker generates those changes in the air pressure. But it turns out that making a MEMS speaker was a very big challenge. The reason for that is that the two things that are important for a speaker are basically impossible to do in a MEMS structure.

Moti Margalit [00:06:27]:

A speaker needs, one, to move a lot, and two, it needs to have a very low resonant frequency. If you consider, let's say, your subwoofer, the resonant frequency, the frequency which moves the most, is something like 50 Hz. A standard speaker in an earphone has a resonance of about 300 Hz. So you are looking to make a structure which is basically made of silicon, a very tough material,. And you want it both to move a lot and you want it to be very compliant, easy to move, just like paper or plastic. And that thing really didn't work out. So back in 2015, the first MEMS speakers arrived on the scene. But because of these physical limitations, those speakers were really limited just to the high frequencies.

Moti Margalit [00:07:24]:

They were tweeters. They were good for making 8 kHz, 9 kHz, high frequencies. Frequencies and not what is called the full-range speaker. So something that can start from very low frequencies going up all the way up to high frequencies. These tweeters were, they saw limited market success and they were used in a hybrid solution. So you took a standard speaker, you combined it with this tweeter and you put everything into an earphone, which makes for a complicated, expensive system. But the goal was to enhance the bandwidth of a typical earphone. Again, coming back to why not make a small speaker? If you look at your earphone, the speakers there are typically somewhere between 10 to 12 millimeters in diameter.

Moti Margalit [00:08:13]:

They have, like I said, a low resonant frequency, so they're pretty good on low frequencies, but then they typically taper off at the high frequencies around 8 to 10 kHz, even the really high-end speakers start to taper off. And this means that when you're looking, listening to music, and when you're trying to really have a hi-fi quality with earphones, you don't get the same experience as you would in, in real life. Or if you are looking at the high-end stereo or high-end over headphones, which give you a frequency response all the way up to 20 and some cases high of kilohertz.

Scott Wilkinson [00:08:58]:

So there was a limitation to these MEMS or early MEMS speakers. And so you and your partner decided to try and address those issues, right?

Moti Margalit [00:09:11]:

Exactly. So it was not enough just to try to make a MEMS speaker. We really needed to go back to first principles and try to understand how to use the MEMS technology to make speakers. And like I said, we went back to first principles and asked ourselves, what can we do with a really small speaker? So the same way that the subwoofer is large, a tweeter is a much smaller speaker. If we're looking at really small speakers, basically the width of a hair, as we can easily make in, in MEMS structures, The best thing this speaker can do is generate ultrasound. What is ultrasound? So the same way that you have sound and that's varying high and low pressure in air traveling at the speed of sound at rates up to 20 kHz, 20,000 times, 20,000 cycles a second. That's sound. Ultrasound is anything above it.

Moti Margalit [00:10:11]:

And the smaller the speaker, the higher the ultrasound needs to be at. We work at 400,000 cycles a second. That's the ultrasound we work at. Wow.

Scott Wilkinson [00:10:22]:

Those frequencies— 400 kilohertz.

Moti Margalit [00:10:25]:

Exactly. Those frequencies, we can't hear anything. Even bats would be challenged to enjoy that kind of music.

Scott Wilkinson [00:10:35]:

Bat earphones. I like it.

Moti Margalit [00:10:39]:

So we need somehow to take that ultrasound and make it into sound. In electronics, we call that modulation or demodulation. The same way that the AM radio is operating at high frequency RF waves, but then you demodulate it at the radio to generate audio frequencies from those radio frequencies. So in essence, we were trying to make a demodulator which would work in the acoustic domain, and that was really the basis of our invention— a speaker that is generating ultrasound and then transforming that ultrasound into sound by demodulation. The way we actually built it— and remember, everything in our structures is tiny— we have On the one hand, a small membrane generating the ultrasound, and then the ultrasound goes through an acoustic channel which we can open and close the acoustic channel. Now consider an acoustic channel. If I block my mouth, you can't hear me. So the same way we block and unblock the speaker, you can think of that.

Moti Margalit [00:11:53]:

I mean, keep in mind we are saying that ultrasound is high and low pressures coming out very quickly. So we have all the high and low pressures. We just need to time them. What the modulator does is pick the right pressure at the right time. And by doing that, we generate sound from ultrasound.

Scott Wilkinson [00:12:14]:

So that's really— sorry, you generate sound in the audible range from ultrasound, which is outside the audible range.

Moti Margalit [00:12:24]:

Yeah, exactly. So, but by modulation, we generate the sound.

Scott Wilkinson [00:12:30]:

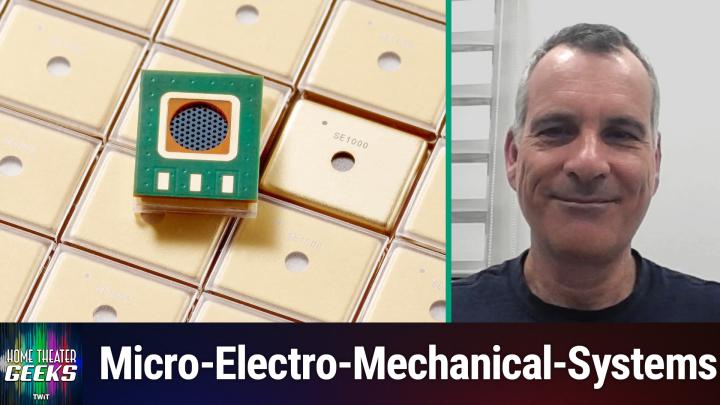

Yeah. So I want to show people a couple of pictures of the MEMS driver that you have developed. Here's one we're looking at. This is basically a chip. It's difficult to get size from this picture, but it's basically a little computer chip, right?

Moti Margalit [00:12:47]:

It's a mechanical chip.

Scott Wilkinson [00:12:49]:

Mechanical chip.

Moti Margalit [00:12:50]:

No transistors in it, but it has a lot of these membranes. It has something like 1,000 of these very small membranes which generate the ultrasound and then modulate it.

Scott Wilkinson [00:13:04]:

And the next picture I think has a little bit more size. What is this? This is one of your chips or one of your transducers. What is it sitting on?

Moti Margalit [00:13:14]:

So it's— what you can see is basically the both sides of the transducer. On the one side, you will have the electrical contacts. Those are the 3 gold pads on the bottom. The audio is coming from that grid in the middle of the square.

Scott Wilkinson [00:13:35]:

Yeah.

Moti Margalit [00:13:35]:

And from the top side, we also have audio output, you know, the same way that the speaker has sound coming out from both sides. So that, that hole is another acoustic aperture. So what you're seeing is the chip from both sides.

Scott Wilkinson [00:13:54]:

Oh, I see, I see. Okay, and, and so all of these squares are, are just multiple copies of this chip? Exactly. I see. Okay, got it.

Moti Margalit [00:14:06]:

Um.

Scott Wilkinson [00:14:09]:

Okay, so let's talk about this modulation from ultrasound to the audible range. How is this accomplished exactly?

Moti Margalit [00:14:24]:

So, as I— basically, the ultrasound has all the pressures we need. It's high pressures, low pressures. The problem is they're coming out too quickly. They're coming out at an ultrasound rate at 400 kHz. Audio is much lower frequency. So basically what we need is to choose the right pressure at the right time, and we do that by having this acoustic gate. So the same way that I'm picturing, you know, blocking my, my mouth— when I blocked my mouth, the ultrasound, the, the audio is attenuated, the ultrasound is attenuated. So by blocking, I prevent the output of the time-varying pressures.

Moti Margalit [00:15:12]:

I pick just the right pressure at the right time. So in comes 400 kHz, out of the modulator comes 1 kHz or 2 kHz or high-quality music.

Scott Wilkinson [00:15:27]:

We have a graphic that sort of illustrates this a little bit, number 3.

Moti Margalit [00:15:34]:

Exactly. So what you can see here on the bottom is the ultrasound speaker. That is moving, f1, the frequency of movement is, let's say, 400 kHz. The ultrasound travels through that aperture that you see in the middle membrane, what's depicted in the green line in the middle, moves through that aperture, and then it comes out at the position between what's called the modulator and the middle membrane. So the ultrasound will basically flow out in that point. The modulator is moving at the second frequency at f2. Now imagine the modulator is moving down all the way to the middle membrane. So it is basically blocking that aperture.

Moti Margalit [00:16:25]:

When it's blocking the aperture, no ultrasound goes through. So you have zero pressure at the output. When the modulator is fully open, the ultrasound will go through. Whatever pressure the ultrasound provides will be coming out the same at that time. In principle, what we have is that the ultrasound frequency is, let's say, 400 kHz. The modulator frequency would be 401 kHz, and the output of this would be a 1 kHz signal. So what you get is the frequency difference between the modulator, and the ultrasound speaker comes out as an audio signal.

Scott Wilkinson [00:17:04]:

So really what we're talking about is the difference between the frequency of the ultrasonic transducer and the frequency of the modulator, which are both in the ultrasonic range, but the difference between them is what's important that gives you the actual audible range, right?

Moti Margalit [00:17:22]:

Exactly.

Scott Wilkinson [00:17:24]:

So is the Is the ultrasonic transducer, the MEMS transducer, is that vibrating in a complex waveform, or is it the modulation that's changing in a complex waveform way? Do you understand my question?

Moti Margalit [00:17:47]:

I understand your question, but let me with your permission, digress for a second because before we address the modulation part of it, I want to highlight something which actually took me about 2 years to come up with a good explanation because my original background is actually in lasers and electro-optics. And when I started the tinkering with audio and I came to people and did the demo and generated sound from this concept, I said, okay, this is interesting. But then they started asking me, okay, but in audio, you need to move air, right? You have big membranes, they move, air is moving. How can you move air efficiently with such tiny membranes? And it took me a while to find a good answer. But that answer really hits on the big difference of this speaker and any other speaker. Our structure is not only an ultrasound and demodulator structure. Looking at it physically from a slightly different perspective, what we have here is a very high-speed pump. Consider what we call the, the speaker is pushing air.

Moti Margalit [00:19:09]:

It's pushing air against the modulation element. When the modulation element is open, Air is pushed and moved out of the speaker. When the modulation element is closed, the air will not be pushed further. Remember, we are working at 400,000 times a second, so the timing between the movement of the membrane and the movement of the modulator determines essentially if air is being pushed forward or if it's being pulled back. And what we want to do is move air. So a standard speaker, let's say working at 1 kHz, 1,000 times a second, is moving a membrane, pushing air 1,000 times a second, pushing and pulling back the air. Our speaker is working at 400,000 times a second, and we can efficiently determine if air is pressure is being built up just by the timing between the two membranes. So essentially we're replacing the membrane, the size of the membrane, with the speed of the air pump.

Moti Margalit [00:20:22]:

So in, in principle, we have 400 times the advantage because we're working at 400 times higher frequency than the standard speaker. So we can make the speaker smaller, we can make the movement of those tiny membranes much less, but you're still moving more air than a comparable speaker. So replacing size for speed is really the key why we can make a much smaller speaker. And this also holds the key to how we actually go about generating sound from this device. You can— I'll do another transgression, but Maybe you have heard of the Class D amplifier.

Scott Wilkinson [00:21:08]:

Sure.

Moti Margalit [00:21:08]:

What is the Class D amplifier? It's essentially an electronic device that instead of just taking your current or voltage and multiplying it, it works at a very high frequency. So it, instead of just multiplying the number of pulses you actually generate in a short interval of time, determine if you're going to have a high voltage or a much lower voltage. The high— the large number of pulses is going through a low-pass filter, and that is driving your standard speaker. Why do I make this analogy? Because you can also think of our device as an acoustic Class D amplifier.

Scott Wilkinson [00:21:53]:

Wow, that's cool.

Moti Margalit [00:21:56]:

Exactly. So We are generating sound pulses and our ear is the low-pass filter. It is getting rid of all those ultrasound signals and just picking up the low-frequency content, which is the audio we're generating.

Scott Wilkinson [00:22:12]:

Got it. Got it. The analogy to a Class D amplifier is very good. I hadn't thought of that before. That's great. And that also then relates to the question that I had, which is Music is not just at a single frequency, it's at a bunch of frequencies at once at the same time. It's a complex waveform. And so I'm trying to— I guess then the relationship between the ultrasonic transducer and the modulator can be a complex waveform.

Moti Margalit [00:22:51]:

It can be, but it doesn't need to be. Keep in mind the analogy I made earlier that this is a mixer. So in principle, if I feed the speaker with an audio signal, A, multiplied by a carrier, by ultrasonic carrier, so I have A times cosine vibrating at a high frequency at 400 kHz. That goes to our ultrasound speaker. The modulator or demodulator is basically— its role is to take out the A from that equation. So if the demodulator is now also operating at the same cosine, it's same frequency as I used in the speaker, I basically have A times cosine times cosine. And cosine times cosine actually is 1 plus sine squared. So we have the A going out of the system, we hear it, and we also have other elements which are high frequency elements, which we don't hear because we simply don't register anything above 20 kHz.

Moti Margalit [00:24:05]:

So it's not such a complex signal that needs to be generated.

Scott Wilkinson [00:24:09]:

Okay, all right. Uh, well, we got into a little math there, and I'm sorry for those of you who, uh, who aren't mathematically inclined, but, um, that's just, just a little, little piece. Uh, now, one, two of the graphics that, that you sent me are, um, showing an acoustic model and an electrical model of the system. Here's the acoustic model. And here's the electrical model. And if you could just touch a little bit on these and tell us what, what we're looking at.

Moti Margalit [00:24:43]:

Okay. So what we see here is basically our structure. The membrane's top and bottom membranes vibrate. When they vibrate, they move air which exists in the middle cavity. They generate pressure. And that pressure pushes out the air through the two air channels. Now imagine the top membrane moving up and the bottom membrane also moving up. The top air channel will be much larger than the bottom air channel.

Moti Margalit [00:25:17]:

So when the pressure is generated in the middle cavity, the most of the air will flow out from the top air channel because it is larger. The acoustic impedance there is lower, so it will simply flow out there, and much less air will flow out from the bottom channel. That means we are generating more flow in the top. Conversely, if we have the opposite situation, more air will flow at the bottom. And you can see that the size of the air channels is really defined by the movement of the membrane. That is what is causing the parametric effect the, the demodulation effect that we expect. Another way to look at this structure, if you can go to the next slide, this is what is called the lumped element acoustic representation. Basically, we are translating the acoustic structure into a comparable electrical structure.

Moti Margalit [00:26:19]:

The arrows, the green and, and yellow circles with arrows in them, they represent the membranes. What does a membrane do? It generates airflow, and in the lumped element representation, the airflow is equivalent to current. So these are current sources which change according to the movement of the membrane. The cavity is what is represented as a capacitor because a cavity has very similar functionality as a capacitor. When current flows into a capacitor, we have voltage. Voltage is the pressure, and like I said, airflow is the current. So air flows into a cavity, we have a buildup of pressure, and in the electrical representation, current flows into the capacitor, we have buildup of voltage. The voltage is then divided between two impedances.

Moti Margalit [00:27:18]:

We have the impedance for the top, ZT, and the impedance for the bottom, ZB.

Scott Wilkinson [00:27:29]:

Sorry, just one second. The language and accent difference, I think in English you would mean impedance.

Moti Margalit [00:27:40]:

Impedance, yes. Thank you very much. Okay.

Scott Wilkinson [00:27:43]:

Just want to make sure everybody understands.

Moti Margalit [00:27:46]:

Sure. Thanks for that. So the impedance basically changes in time. Those acoustic channels that are changing are changing the acoustic impedance, impedance, and those are represented by those arrows over the two top and bottom impedance.

Scott Wilkinson [00:28:09]:

Yes.

Moti Margalit [00:28:11]:

And the ratio between them is what determines if we have a current or it's a comparable airflow going out from the top or going out from the bottom. If you plug in the, the, the movement of the membrane into this electrical structure, what you find is, is exactly what, what I mentioned earlier. If one is working at 400 kHz, the other at 401 kHz, the output of this circuit will be a current moving at 1 kHz. Current moving at 1 kHz is the equivalent of an acoustic wave at 1 kHz.

Scott Wilkinson [00:28:59]:

Yes, very good. Very good. There's also another circuit diagram I wanted to show people, and we're getting pretty geeky here, but hey, it's home theater geeks. That's the name of the show, so let's get geeky. The next diagram is a circuit diagram, more specific. Tell us what we're looking at here.

Moti Margalit [00:29:22]:

Okay, so this circuit diagram is basically another way of looking at the speaker. The top circuit diagram is how you would typically represent a standard speaker, the same technology for the last 150 years. And what you can see here is basically 3 circles. The first circle from the left, that is the electrical circuit. So essentially that describes how the input voltage, Ui, the input voltage to the, to the speaker is translated in the second circuit. It is translated to mechanical movement. So the circle in the second, in the middle, is basically a circuit describing the mechanical movement of the speaker. And the circuit on the left, on the right-hand side, is how that membrane, the mechanical movement of the membrane, is then translated into an acoustic signal.

Moti Margalit [00:30:31]:

So these three circles represent the three areas in which a speaker works— mechanical, electrical to mechanical to acoustic. And these parameters are defined by the parameters of the speaker. This, of course, is rather complex, and this is the reason that you actually need to invest a lot in the design of speakers, of their cabinets, and of the acoustics around them.

Scott Wilkinson [00:31:01]:

Yeah.

Moti Margalit [00:31:02]:

Our device, as I mentioned earlier, is a pump. A pump generates airflow. And what we try to represent in the bottom picture is that the input signal, which is the audio signal, is simply translated into airflow. There's no complex dynamics which are determined by any of the acoustics around the speakers. Those tiny membranes, you can think of them as a digital speaker. They simply translate one-to-one. Whatever you put in, they put out. That is why we can get very good quality audio from these speakers, very consistent.

Moti Margalit [00:31:48]:

And very scalable.

Scott Wilkinson [00:31:52]:

It's quite amazing, actually, how this works. Now, in addition to the transducer itself, it needs to be attached to something, which I believe you call an acoustic coupler, right?

Moti Margalit [00:32:16]:

So our initial, the technology we have developed, we start with earphones. That's the low-hanging fruit. I mean, it's a huge market and one where we bring a lot of benefits. But the earphone basically is attached into the ear or around the ear. And the ear has specific acoustics which we need to mimic. The coupler, you can think of it as an ear simulator. It has the same acoustic response as the ear so that when we develop our speaker or when we develop an earphone using our speaker, we basically use a coupler to mimic the ear and optimize the performance for the earphone.

Scott Wilkinson [00:33:04]:

I see. So the coupler is actually in the development process.

Moti Margalit [00:33:11]:

Exactly.

Scott Wilkinson [00:33:11]:

And it's, it's, it's simulating what happens when that, uh, speaker gets put in an ear canal.

Moti Margalit [00:33:19]:

Exactly.

Scott Wilkinson [00:33:20]:

Okay. All right, I got it. Now, your white paper— you mentioned this before, and I wanted to get an explanation for this— your, your white paper mentioned two different, uh, models or methods of analysis. One is called a finite element, and one is called lumped element, and I don't know what lumped element is. Can you explain that and finite element analysis?

Moti Margalit [00:33:46]:

Sure. So lumped element is basically what we described earlier. That means taking the analogy of acoustics and translating it into electrical circuitry because people have invested so much in the simulation of electrical circuitry. It is very, let's say, whatever we do in electronics, we can do in acoustics. So we said airflow is the current source, impedance is a resistor, or volume is a capacitor, a channel is an inductor. So we have all these analogies. And these are lumped elements. So instead of having, let's say, a long tube, we have just one electronic component which resembles the same functionality as the long tube.

Moti Margalit [00:34:45]:

So that is why it's called lumped element. And it's a good way to make a high-level assessment of an acoustic circuit. And like I showed earlier, that is the common way that you would analyze a speaker. However, with the, you know, this was good in the '40s and is used still today. But as the performance of computers becomes more accessible and we can do more and more simulations, the finite element model is a real physical description of the physics around the speaker. So it can be around the speaker, it can be the acoustic channels. And then it takes into account phenomena which is not always fully described in the lumped element. The lumped element is an average look.

Moti Margalit [00:35:39]:

It does not, for example, take into account effects like viscous effects, which means that if you have air moving in a narrow channel, whatever the air in contact with the channel itself will not move. The air in the middle moves. So the airflow in a channel is not the same velocity across the cross-section.

Scott Wilkinson [00:36:02]:

Got it.

Moti Margalit [00:36:03]:

The lumped element, you would say, ah.

Scott Wilkinson [00:36:05]:

It'S absolutely the same, it's close enough.

Moti Margalit [00:36:08]:

It'S close enough, you know, engineering, it's close enough. But if you want really to, to look into more details and to do a better simulation and a better design, then you do a finite element and In recent years, many tools have gone into that. And in some of the graphs that you see in the white paper, you can see that, for example, for low frequency, lumped element is good enough. But when you go to higher frequencies, you're missing out characteristics that only appear in the more detailed simulations.

Scott Wilkinson [00:36:42]:

Right. For example, so here's a frequency response chart that shows the lumped element estimation and the finite element estimation, and, and they're roughly the same in the low frequencies all the way up to, uh, looks like 5 kHz or so. Yeah. And then they diverge.

Moti Margalit [00:37:07]:

Exactly.

Scott Wilkinson [00:37:09]:

And you see these really sharp peaks in the FEM finite element model, which I I assume is a more accurate representation of what's going on, right?

Moti Margalit [00:37:21]:

Indeed. What you see here, and again, this is the coupler model. The coupler, this is again a question of history. In the past, the original couplers were good for up to 8 kHz. Why? Didn't really need the small speakers to work above the audio for telephones, you know, the, the wired telephones for those who remember those early phones. 4 kHz was all you needed. Yeah. And the couplers were designed for those.

Moti Margalit [00:38:00]:

As the microspeakers became better and better and you really wanted to reproduce a better quality sound, you needed couplers that go to higher frequencies. And the coupler that is simulated here is one of the older couplers, and that coupler is trying to mimic the ear canals with mechanical structures made of metal. The ear is made, of course, of bone and skin. And so the ear damps those high-frequency resonances while the coupler basically has a very high resonance embedded in it. So this is an artificial thing. It is not something that would be reproduced in the ear.

Scott Wilkinson [00:38:50]:

I was going to ask about that. I was going to say, wow, that would probably sound very harsh, but it's not something to worry about, huh?

Moti Margalit [00:38:58]:

It's not something to— it's an artifact. It's not something that is real. But what I would highlight is really the fact that above a certain frequency, in this case, like you said, 6-7 kHz, you see that the finite element basically says that the acoustic signal does not drop off as we expect from the lumped element, but rather has a more flat response. And that response actually goes up to very high frequencies, 20, 30, 40 kHz. This specific design, you can see that it has two of these peaks, one at around 400 kHz, 300 kHz, and another slightly above 1 kHz. And these are— again, we introduce these acoustic resonances for various reasons. This is just a a demonstration that even though our speaker is based on totally new physics, we can well represent it within the existing models of acoustic design. So both for the lumped element and for the finite element, we represent our speaker as a current source, and then it simply gets you the acoustic output of an acoustic signal driven with this current source.

Scott Wilkinson [00:40:27]:

So my next question is this: you have two white papers. One of them is explaining the technology, and it's, it's very good. And the other one is talking about concerns with modulated ultrasound and how to, how to address them. What are these concerns?

Moti Margalit [00:40:53]:

So if you go to literature, there are limitations on the acoustic output. These limitations start from the workplace, from machinery. Even when you play music, you have a label, don't play the music too loud, or if you go to a concert. Because loud music will damage your hearing. It turns out it's not just the sounds that you can hear, but also the ultrasound, but typically the lower frequency ultrasound. So 20 kHz, 40 kHz, 50 kHz. People have reported feeling dizzy, feeling, having headaches, and even there are some reports of temporary hearing loss when subjected to ultrasound. As a result, there are regulations on the use of ultrasound or the exposure to ultrasound, the same that are regulations on exposures to noise.

Moti Margalit [00:41:59]:

So if you're working in a noisy environment, you're supposed to wear hearing protectors to protect your hearing. The regulations on ultrasound they are in place and basically they limit or express concern about ultrasound up to frequencies of 100 kHz. Beyond the 100 kHz, the ultrasound that is emitted has no impact on the ear. Okay, so our ear has elements in it that are sensitive to either the basic tone or the undertones of ultrasound up to 100 kHz. Beyond that, all the literature that exists today says there is no problem with hearing of ultrasound. However, above 100 kHz and much higher frequencies, we have another source of ultrasound in the world, and that is the medical ultrasound. Can be for various diagnostics, testing, and Imaging, imaging, bone healing. So ultrasound is not a new thing in the world.

Moti Margalit [00:43:14]:

You know, even in cars, like the sensors around your cars use ultrasound to make sure that you're not hitting something else. So above 100 kHz, the limitations are mainly on the amount of energy the ultrasound has, because if the energy is very, very high, like for example, in extreme cases of medical imaging, maybe you will heat up, heat by temperature, because the ultrasound is absorbed in the skin and you may cause some heating up. We're talking about many watts of ultrasound. There are treatments where we use ultrasound, for example, for gallbladder, for other applications. Those are very powerful ultrasounds. In comparison, our ultrasound is 400 kHz, so it's totally safe from a health, from a hearing perspective. And from a power perspective, it is 4 orders of magnitude. So we're talking about microwatts.

Moti Margalit [00:44:23]:

This is less power than you have in your, let's say, Bluetooth or Wi-Fi connection. Much less than you have in them. And as a result, those, you know, you don't heat anything up. There's really no danger. Having said all that, we understand that there is a concern. You know, it's a new notion people have. Some people still have concerns over the electromagnetic radiation we're exposed to. And as a result, we published a second white paper where we demonstrated That's very simple existing solutions which are used for protection of acoustic devices.

Moti Margalit [00:45:04]:

Basically, in your earphones today, there is an acoustic mesh protecting the speaker and microphone from your sweat in the ear, from other dust and other contaminants. And what we basically demonstrated is that acoustic mesh attenuates the ultrasound. So if you want, if you have, let's say our customers or companies making earphones and their customers are of course the consumers, if there is concern about ultrasound, you can apply one or more layers of this acoustic mesh and get rid of the ultrasound to whatever level you desire. So even though these speakers work by a totally new principle, They do emit ultrasounds. These ultrasounds are totally benign, very low power, very high frequency, no issue whatsoever. But if there is any cause for concern, you apply a mesh, which in any case you would apply to protect against water and the humidity. And then you also get rid of the ultrasound completely. And that was the purpose of that second white paper.

Scott Wilkinson [00:46:17]:

Got it. We have a couple graphics to show. I'm going to skip over a couple here to number 11. So here we have the resistance and the impedance of the mesh in, in, in the— that you were talking about, the acoustic mesh. And then the next one shows the frequency response when you add that. And the comparison chart is with and without the, the resistive or the mesh. Isn't that right, Modi?

Moti Margalit [00:46:49]:

Yeah. What you can see is the mesh also actually smooths out. So the design of this, this is an earphone. And basically, we introduce in the earphone a tube, and the tube has a resonance. And that is what you see, the peak. Around 4 kHz in the graph on the left.

Scott Wilkinson [00:47:15]:

Yeah.

Moti Margalit [00:47:17]:

When we introduced the mesh, the mesh actually smooths out that resonance. And that is what you see on the right-hand side. The peak is gone. But you can see that aside from removing the peak, and that actually is important Because like you said earlier, these peaks make for a house sound. And then it's how to actually equalize them. So we get rid of that peak, but we still have the nice frequency response at the higher frequencies. And what we are trying to impress here is that actually there is no acoustic loss. As a result of that, introducing that mesh.

Moti Margalit [00:48:04]:

We get rid of the peak, which is something we would want to do anyhow. But there is no loss at the low or high frequencies, which means that even though we're introducing this acoustic mesh, even though we are reducing the ultrasound, there's no negative impact on the audio.

Scott Wilkinson [00:48:23]:

On the audio output, yeah. I believe the other pictures we have show some attenuation as a function of mesh thickness. So as you get thicker and thicker, you get more attenuation, which makes total sense.

Moti Margalit [00:48:36]:

Yeah, So, but actually what we are showing here is the attenuation of the ultrasound portion. So this is actually what we want to show, that even for a relatively thin mesh like 0.3 micron mesh, we're getting 30 dB of attenuation. So 30 dB, 20 dB means 10 times less attenuation. If we have 30 dB, that's like 30 times lower ultrasound. Right. So if you have any concerns about the ultrasound, apply a 0.3 millimeter mesh and you are going to get rid of times 30 of the ultrasound.

Scott Wilkinson [00:49:23]:

So again, that's, uh, to address any concerns that somebody might have about ultrasound getting through. Yeah, exactly. Um, okay, well, at CES you introduced, um, or you announced, I should say, uh, that you have a, a partnership with semiconductor manufacturer. I assume that that is in order to ramp up your production capabilities and doing your technology at scale, right?

Moti Margalit [00:50:03]:

So actually, that we did not announce. Let me digress for a second. Please. So MEMS, microelectromechanical systems, are manufactured in a semiconductor fab, in a silicon fab, much like an electronic chip is manufactured. So we as a company are what's called fabless. We don't have our own billion-dollar manufacturing facility, but rather we do the design, we do simulations, and then we manufacture them at a manufacturing partner. We receive the parts, we test them, and we ship to customers. We have in place a manufacturing partner.

Moti Margalit [00:50:46]:

This is a very high-volume MEMS fab which can manufacture hundreds of millions of MEMS speakers a year. And that is work that's been going on for the last 2 years, and we are ramping up to production in 2026.

Scott Wilkinson [00:51:05]:

Got it.

Moti Margalit [00:51:06]:

In addition to the MEMS speaker, we have a, an amplifier, basically something that receives an audio signal and translates that audio signal into the special drive signals we need for our speaker. That is a standard electronic chip manufactured in a standard electronic fab, and that again is something that we have up and running and providing to customers.

Scott Wilkinson [00:51:33]:

So what was the announcement at CES then?

Moti Margalit [00:51:35]:

Exactly. So when you look at an earphone, our electronic amplifier is connected to the brains of the earphone. The earphone brains has a Bluetooth connection to the outside world. It is connected to microphones. It does audio processing. It can do transparency mode, ANC, all the functionality that you want from from your earphone is done in that brains. And those— there are a couple of companies making those brains, as well as some companies making them themselves. And then it's a question of how our amplifier integrates with this, the brains of the earphone.

Moti Margalit [00:52:23]:

And the announcement that we made is that we actually have a very close cooperation with one of companies making these brains. And that enables us to embed some of our code into that brains of the earphone. What does that give? It actually, when we look at the system perspective, by doing that, we are moving some of the processing from our chip, which is not optimized for processing, to a chip which is well optimized to processing. So we achieve performance gains on power reduction, we reduce the power, the overall system power, and we remove a very important aspect which is latency. That means how long it takes for a signal to move through the audio chain. Latency is the critical aspect which means how good you can make noise cancellation. Because when you cancel noise, you're really having a feedback loop. Smaller latency, much better noise cancellation, broader bandwidth without all the artifacts.

Moti Margalit [00:53:35]:

So by having this partnership in place, we can then offer to our customers, the earphone manufacturers, a much better solution, less power, better, lower latency, better noise cancellation, and some other features. So that was the partnership that we announced. Unfortunately, I can't share the name of that partner at present, but the goal is to basically build up an ecosystem that supports modulated ultrasound in the most native way. And by doing that, we ensure that modulated ultrasounds are not only competitive, but they can actually offer much better performance across additional parameters compared to a standard speaker. So if you look at what's the advantage of our speakers, first and foremost, they're smaller. That means you can make totally different earphones, something that actually fits into the ear much more comfortable because the acoustic interface can be much smaller. People complain that earphones are too large. They don't fit well into the ear canal.

Moti Margalit [00:54:45]:

That completely goes away with our speaker. Our speaker can work through very narrow acoustic channels and it's much more comfortable fit into the ear. Sound is much better. We have better low frequencies, we have better high frequencies. So a single speaker actually gives you a premium sound quality, a full-range speaker. And then we don't have any vibrations, so that makes it both more comfortable but also has benefits on the assembly of the speaker into the earphone, the isolation of the speaker from the microphones and other components. In terms of power, we are competitive with the existing earphones. But then when we offload the power to this other— the brains of the system, we can actually be better than a comparable speaker.

Moti Margalit [00:55:40]:

In the same essence, when we offload that, we have better latency, we have better noise cancellation performance, and we have a tighter integration of the system and ultimately better performance across many parameters.

Scott Wilkinson [00:55:58]:

Well, that all sounds fabulous. My final question is, when are we going to see product? When can consumers buy such a thing?

Moti Margalit [00:56:07]:

So that's an excellent question, and the simple answer is that when you look at our sales cycle, our customers require 6 to 12 months from the introduction of our speakers until they have earphones with our speakers out in the market. I would expect by the second half of '27 you'll see earphones in the market with our speakers.

Scott Wilkinson [00:56:36]:

Well, I certainly look forward to that. This is a fascinating technology, and I really appreciate you being on the show to help explain it to us. Thanks so much.

Moti Margalit [00:56:48]:

Thank you very much for having me. It was a pleasure.

Scott Wilkinson [00:56:50]:

Yeah, great. So if you want more information about the, uh, Sonic Edge MEMS speaker, you can go to their website sonicedge.io, and, uh, there's a lot of great information there where you can learn more about this MEMS speaker technology. Modi, thanks again so much for being here.

Moti Margalit [00:57:21]:

Thank you very much for having me. Me.

Scott Wilkinson [00:57:24]:

Now, if you have a question for me, send it along to htg@twit.tv, and I'll answer as many as I can right here on the show. And if you have a home theater that you're proud of, send me some pics. Maybe we'll feature it right here on Home Theater Geeks. Until next time, geek out!